For an old Nvidia card, it might be too much of an energy drain.

I was also using the integrated Intel GPU for video re-encodes, and I got an Arc A310 for 80 bucks, which is the cheapest new card with AV1 support you will find.

I like sysadmin, scripting, manga and football.

Better than anything. I run it through Vulkan in LM Studio because ROCm on my RX 5600 XT is a real pain.

Ollama has had an issue open about a Vulkan backend for a while, but sadly it doesn’t seem to be going anywhere.

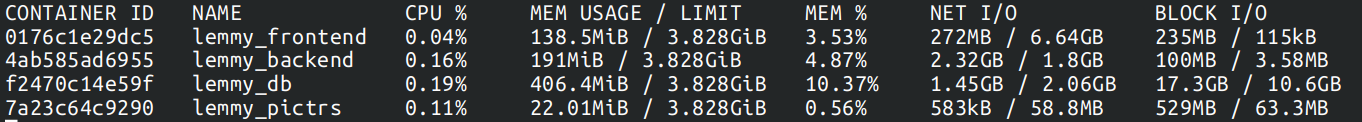

I put up some Docker stats in a reply, in case you missed it on the refresh.

They cover the whole stack over the past 16 hours.

# docker-compose stats

It depends on how many communities you subscribe to and how much activity they have.

I’m running my single-user instance, subscribed to 20 communities, on a 2 vCPU / 4 GB VPS that also hosts my Matrix server and a bunch of other stuff. Right now I mostly see CPU peaks of 5 to 10% and RAM at around 1.5 GB.
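If you want a single RAM figure for the whole stack, one option is to add up the per-container usage that `docker stats` reports. A minimal sketch, assuming memory is printed as `used / limit` with binary suffixes (B, KiB, MiB, GiB); the helper name `sum_mem_mib` is made up for illustration:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read lines like "512MiB / 3.8GiB" (the format of
# docker stats --format '{{.MemUsage}}') on stdin and print total used memory in MiB.
sum_mem_mib() {
  awk '{
    v = $1                                  # "used" part, before the " / limit"
    if      (v ~ /GiB$/) { sub(/GiB$/, "", v); t += v * 1024 }
    else if (v ~ /MiB$/) { sub(/MiB$/, "", v); t += v }
    else if (v ~ /KiB$/) { sub(/KiB$/, "", v); t += v / 1024 }
    else if (v ~ /B$/)   { sub(/B$/, "", v);   t += v / 1048576 }
  } END { printf "%.1f\n", t }'
}
```

On the host it would be fed with `docker stats --no-stream --format '{{.MemUsage}}' | sum_mem_mib`.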

I have been running it for 15 months and the Docker volumes total 1.2 GB. A single plain-text pg_dump of the Lemmy database is 450 MB.
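To take a dump like that, one option is to run pg_dump inside the database container and compress it on the way out. A sketch assuming the compose service is called `postgres` and both the role and the database are called `lemmy` (assumptions; check your own docker-compose.yml), run from the stack’s folder; `dump_lemmy_db` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper. Assumed names: compose service "postgres",
# role "lemmy", database "lemmy". Run it from the folder holding docker-compose.yml.
dump_lemmy_db() {
  local out="${1:-lemmy-$(date +%F).sql.gz}"
  # -T disables the TTY so the plain-text dump streams cleanly into gzip
  docker compose exec -T postgres pg_dump -U lemmy lemmy | gzip > "$out"
  # PIPESTATUS[0] is the exit code of the docker/pg_dump side of the pipe
  if [ "${PIPESTATUS[0]}" -ne 0 ]; then
    rm -f "$out"   # don't keep a truncated dump around
    return 1
  fi
  echo "$out"
}
```

Gzipping is optional; it just keeps the plain-text dump from eating disk space.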

Yep, I also run wildcard domains for simplicity.

I do the DNS challenge with Let’s Encrypt too, but to avoid leaking local DNS names to the public, I just run a Pi-hole locally that resolves those domains.
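Pi-hole’s DNS is built on dnsmasq, whose `address=/domain/ip` option answers for a domain and all of its subdomains, which is what makes a local wildcard work. A sketch assuming a dnsmasq config directory such as /etc/dnsmasq.d (newer Pi-hole versions may need reading that directory enabled in their settings); `home.example.com`, the IP, the file name and the helper name are all placeholders:

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a dnsmasq rule that resolves DOMAIN and every
# subdomain of it (e.g. jellyfin.home.example.com) to IP, on the local network only.
add_wildcard_record() {
  local domain="$1" ip="$2" conf="${3:-/etc/dnsmasq.d/02-local-wildcard.conf}"
  local line="address=/${domain}/${ip}"
  # Only append if the exact rule is not already there
  grep -qxF "$line" "$conf" 2>/dev/null || echo "$line" >> "$conf"
}

# Example with placeholder values:
# add_wildcard_record home.example.com 192.168.1.10
```

Afterwards Pi-hole’s DNS needs a reload (on Pi-hole v5 that is `pihole restartdns`).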

What is your budget?

i5-7200U

That’s Kaby Lake, which seems to support hardware decoding up to HEVC; that might be enough for you.

https://en.wikipedia.org/wiki/Intel_Quick_Sync_Video#Hardware_decoding_and_encoding

For a bit of future-proofing, you might want to look at something Tiger Lake or newer, since those seem to support AV1 decoding in hardware.
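To check what a given machine actually decodes in hardware, `vainfo` (from libva-utils) lists each supported VAProfile with its entrypoints, where `VAEntrypointVLD` means decode. A sketch that filters that output; the helper name is hypothetical and it assumes the usual `VAProfileXxx : VAEntrypointYyy` line format:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if vainfo output on stdin lists a decode (VLD)
# entrypoint for a profile whose name starts with VAProfile<CODEC>, e.g. HEVC or AV1.
has_hw_decode() {
  grep -Eq "VAProfile${1}[^[:space:]]*[[:space:]]*:[[:space:]]*VAEntrypointVLD"
}

# On the actual box:
# vainfo 2>/dev/null | has_hw_decode AV1 && echo "AV1 decode in hardware"
```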

Arch Linux, Kubuntu, Supergrub, Tails, Kali, Windows

I just stop my containers and tar-gzip their compose files, their volumes, and the host’s /etc folder.
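A minimal sketch of that routine, assuming each stack lives in its own folder with a docker-compose.yml and bind-mounted volumes underneath it (named volumes kept in Docker’s own data directory would have to be passed as extra paths); `backup_stack` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: stop a compose stack, archive its folder (compose file plus
# bind-mounted volumes) and any extra host paths such as /etc, then start it again.
backup_stack() {
  local stack_dir="$1" dest_dir="$2"
  shift 2                                   # remaining args: extra paths to include
  local compose="${stack_dir}/docker-compose.yml" archive rc
  archive="${dest_dir}/$(basename "$stack_dir")-$(date +%Y%m%d-%H%M%S).tar.gz"

  # Stop first so databases are not written to while tar reads them
  docker compose -f "$compose" stop || return 1
  tar -czf "$archive" "$stack_dir" "$@"
  rc=$?
  # Bring the stack back up whether or not the archive succeeded
  docker compose -f "$compose" start
  [ "$rc" -eq 0 ] && echo "$archive"
  return "$rc"
}

# Example (as root, so all of /etc is readable):
# backup_stack /srv/lemmy /mnt/backups /etc
```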

Assuming you have all of them under one folder, I just run this lol:

```bash
for f in */; do
  # Skip folders that are not git checkouts
  [ -e "${f}.git" ] || continue
  echo "$f"
  git -C "$f" pull
  git -C "$f" submodule update --recursive --remote
  echo ""
  echo "#########################################################################"
  echo ""
done
```

For archival purposes, software encoding is generally more efficient size-wise at the same quality.

I am also waiting for an Arc card to arrive to plug into my Jellyfin box.

Hardware encoding is fast, yeah, but it won’t save me disk space.
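To compare the two approaches on your own files, the same ffmpeg command can be pointed at either a software or a hardware AV1 encoder. A sketch assuming an ffmpeg build with `libsvtav1` (software) and `av1_qsv` (Intel Quick Sync on an Arc card); the preset and CRF values are placeholders rather than tuned recommendations, and `DRY_RUN` and `encode_av1` are hypothetical names:

```bash
#!/usr/bin/env bash
# Hypothetical helper: encode IN to OUT as AV1, either in software (SVT-AV1,
# slow but smaller files) or on an Intel GPU via Quick Sync (fast, usually bigger).
# Set DRY_RUN=1 to only print the ffmpeg command.
encode_av1() {
  local mode="$1" in="$2" out="$3"
  local -a video
  case "$mode" in
    sw) video=(-c:v libsvtav1 -preset 4 -crf 30) ;;  # lower preset = slower, more efficient
    hw) video=(-c:v av1_qsv) ;;                      # needs an Arc GPU and a QSV-enabled ffmpeg
    *)  echo "usage: encode_av1 sw|hw IN OUT" >&2; return 2 ;;
  esac
  local -a cmd=(ffmpeg -i "$in" "${video[@]}" -c:a copy "$out")
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

Encoding the same clip both ways and comparing file sizes at similar visual quality is the practical way to see the trade-off on your own content.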

I’m still not sure whether I will upgrade from my 3700X to a 9900X or a 9700X.

I’m recently:

So spending more on a stronger CPU rather than on an expensive GPU seems to be the answer for me. If I gamble on proper ROCm support for some AI workloads and lose, at least I can run some casual stuff on the CPU.

From what I understand, it’s still throttled by the server to real time, so downloading a 2-hour movie would still take two hours 🥲

Yeah, I have been eyeing upgrades to get AVX-512 anyway, because lately I have been doing very heavy, very-low-preset AV1 encodes, but when they are a dick about it I just feel like postponing it.
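Whether a CPU exposes AVX-512 can be read from the flags line of /proc/cpuinfo on Linux (`avx512f` is the foundation subset). A sketch; the helper name and the optional file argument (handy for testing) are illustrative:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if FLAG (e.g. avx512f) is listed as a whole word
# in the CPU flags. Reads /proc/cpuinfo by default; a second argument points elsewhere.
has_cpu_flag() {
  local flag="$1" file="${2:-/proc/cpuinfo}"
  grep -m1 '^flags' "$file" | grep -qw -- "$flag"
}

# Example:
# has_cpu_flag avx512f && echo "AVX-512 available"
```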

lol, for the past 15 years I have “rebuilt” my desktop every 5 years, but I didn’t expect they would try to force me off my Ryzen 7 3700X right on schedule.

I assume your connection is 40 MB/s?

Cat 5e is capable of the full 1 Gbps of my connection, so I easily see over 90 MB/s. That being said, I bought a big 100 m bulk roll years ago and have been cutting and terminating cables from it myself with care.
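Link speeds are quoted in bits and download speeds usually in bytes, so dividing by 8 gives the ceiling: 1 Gbps is 125 MB/s before protocol overhead, and 40 MB/s works out to 320 Mbps. A tiny sketch of the conversion; the helper names are illustrative:

```bash
#!/usr/bin/env bash
# Convert between link speed in megabits/s and transfer rate in megabytes/s (8 bits per byte).
mbps_to_MBps() { awk -v v="$1" 'BEGIN { printf "%.1f\n", v / 8 }'; }
MBps_to_mbps() { awk -v v="$1" 'BEGIN { printf "%.1f\n", v * 8 }'; }

# mbps_to_MBps 1000   -> 125.0
# MBps_to_mbps 40     -> 320.0
```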

If you were indeed using leftover or free cables of questionable quality, that could well be the reason for the poor performance.

Is x266 (H.266/VVC) actually taking off? With all the AOMedia members that control graphics hardware (AMD, Intel, Nvidia) on the same side, it feels like MPEG will need to win over a big partner to stay relevant.