China has a long history of siding with vehicles running people over.

Not sure if you’re referring to Tiananmen or the fun Chinese practice of double tap

This never happened

- PRC

Person crosses street when they shouldn’t.

Car lightly taps them and stops.

Person is not injured.

Person is stupid.

I think regulation is important, but this isn’t news.

TBF, the car is stupid too, not because of this specifically but because AI in general is stupid. If it were a human in the car, we would just say he was angry, but with AI we know it wasn’t angry; it made a mistake. It happened to catch the mistake before it killed somebody, but that mistake is in the programming of every single car of that type in the world. Letting a small problem like this slide is equivalent to making it legal, since it becomes a nationwide bug that is allowed.

Yeah. The person was on ‘FA’ and now (barely) on ‘FO’.

What happened to looking both ways and being wary of the things that could crush you?

Fuck around - find out

Whether or not to run over the pedestrian is a pretty complex situation.

what was the social credit score of the pedestrian?

To be fair, you have to have a pretty high IQ to run over a pedestrian

Right?

I saw “in a complex situation” and thought “what’s complex? Person in road = stop”

> Person in road = stop

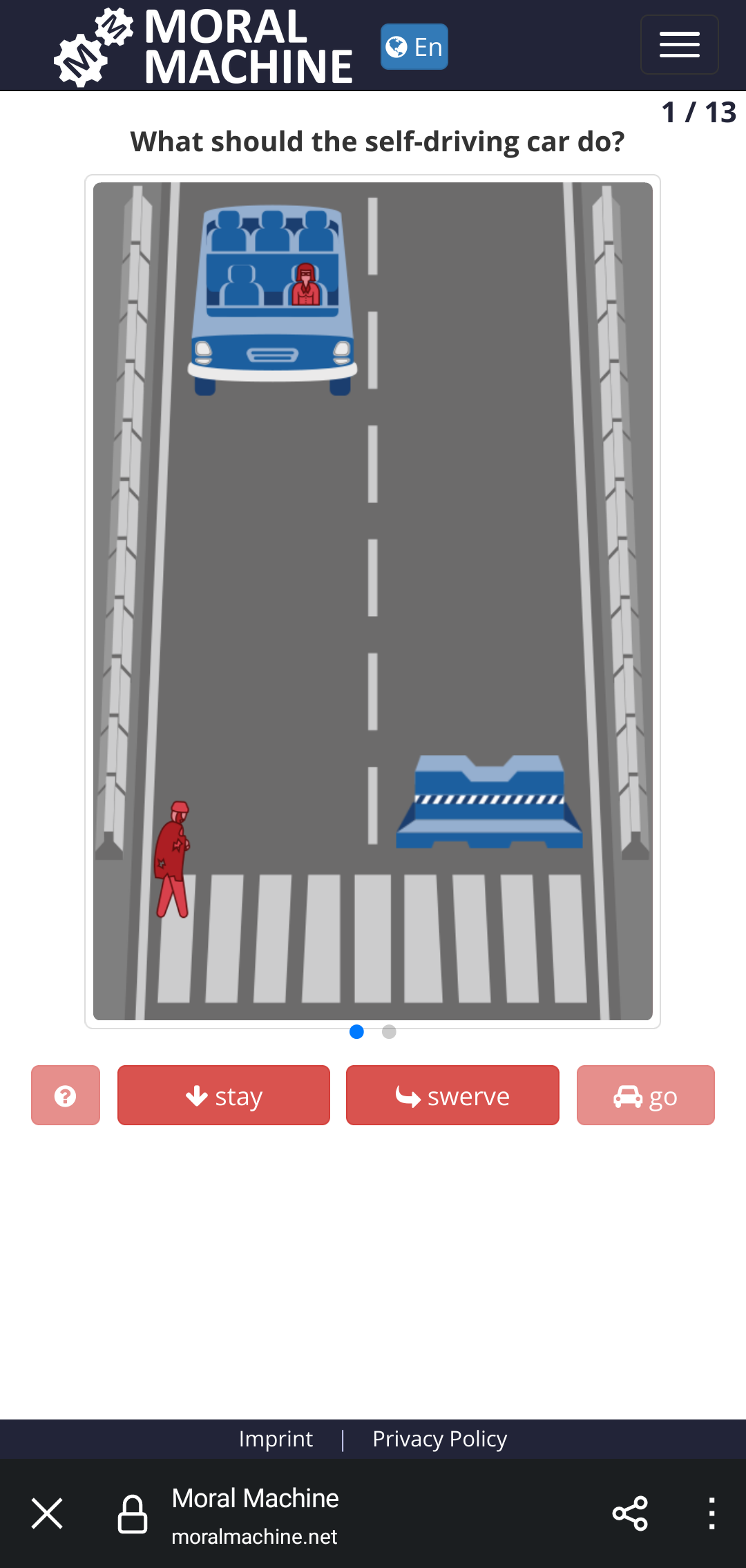

i recommend trying https://www.moralmachine.net/ and answering the 13 questions to get a bigger picture. it will take you no more than 10 minutes.

you may find out that the problem is not as simple as a four-word soundbite.

In this week’s Science magazine, a group of computer scientists and psychologists explain how they conducted six online surveys of United States residents last year between June and November that asked people how they believed autonomous vehicles should behave. The researchers found that respondents generally thought self-driving cars should be programmed to make decisions for the greatest good.

Sort of. Through a series of quizzes that present unpalatable options that amount to saving or sacrificing yourself — and the lives of fellow passengers who may be family members — to spare others, the researchers, not surprisingly, found that people would rather stay alive.

same link: https://archive.is/osWB7

Is every scenario on that site a case of brake failure? As a presumably electric vehicle, it should be able to use regenerative braking to stop or slow down, or even rub against the guardrails on the side, in each instance I saw.

There’s also no accounting for probabilities or magnitude of harm, any attempt to warn anyone, or the plethora of bad decisions required to put the car, going what must be highway speeds, down a city stroad with a sudden, undetectable, complete brake system failure.

This “experiment” is pure, unadulterated propaganda.

Oh, and that’s not even accounting for the intersection of this concept and negative externalities. If you’re picking an “AI” driving system for your car, do you pick the socially responsible one, or the one that prioritizes your well-being as the owner? What choice do you think most people pick in this instance?

“here, take these extremely specific made up scenarios that PROVE I AM RIGHT UNEQUIVOCALLY except for the fact that all of them are edge cases, do not represent any of the actual fatalities we have seen and in no way are any of them representative of the case that sparked the whole discussion”

I think I’ll skip the “AI is always good and you’re just too stupid to get why it should be allowed to kill people” website.

> This “experiment” is pure, unadulterated propaganda.

yeah, you didn’t get it at all…

> What choice do you think most people pick in this instance?

oh hey, you are starting to get it 😂

maybe, when you finally understand what someone is trying to tell you, act less smug, don’t try to pretend you just got them, and you will look less like a clown.

the experiment is not about technical details, it is trying to convey the message that “what is the right thing to do” is not as easy to establish as you might think.

because yes, most people will tell you to protect more people at the expense of fewer, but that usually lasts only until the moment they are part of the smaller group.

While I do agree that there are scenarios that are very complicated, I feel like this website does a very poor job at showing those. Almost every single scenario they show doesn’t make sense at all. Why are there barriers on one side of the road, why does half the crosswalk have a red light while the other half has a green light?

> Why are there barriers on one side of the road, why does half the crosswalk have a red light while the other half has a green light?

what, have you never seen construction work on the road? have you never seen a manually activated traffic light?

both of these scenarios are happening on a daily basis in real life.

and they are here so you can think about the decision. is the car occupant’s life more valuable than the life of an innocent bystander (eh, is bywalker a word)? does that change when one group is bigger in numbers? does it change when one group is obeying the law and the other one is not?

i have answered basically the same question here: https://lemm.ee/post/36643403/13142015

Can you swerve without hitting a person? Then swerve, else stay. This means that the car will act predictably, and in the long run that is safer for everyone.
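The swerve-or-stay rule is small enough to write down directly. A minimal Python sketch, assuming a hypothetical `Lane` type whose `has_person` flag is already known (which, as other comments point out, is the genuinely hard part), and assuming the car only considers swerving when its current path is blocked:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Lane:
    """Hypothetical stand-in for a path the car could take."""
    name: str
    has_person: bool  # assumed known in advance; real perception is the hard part


def choose_lane(current: Lane, alternatives: list[Lane]) -> Lane:
    """Swerve to the first alternative with no person in it; otherwise stay."""
    if not current.has_person:
        return current  # assumption: nothing to avoid, so no reason to swerve
    for option in alternatives:
        if not option.has_person:
            return option  # swerving hits nobody, so swerve
    return current  # every swerve option hits someone, so stay predictable


ahead = Lane("ahead", has_person=True)
print(choose_lane(ahead, [Lane("left", True), Lane("right", False)]).name)  # right
print(choose_lane(ahead, [Lane("left", True)]).name)  # ahead
```

The point of the rule is that it never ranks one person against another: it only asks whether an empty path exists, which is what makes the car's behaviour predictable.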

can you not enter the road in front of an oncoming vehicle while ignoring the red light? if you can, then don’t. that means that pedestrians will act predictably and in the long run it will be safer for everyone.

Interesting link, thanks. I find this example pretty dumb though. There is a pedestrian crossing street on zebra crossing. Car should, oh I don’t know, stop?

Never mind, read the description: the car has a brake problem. In that case, try to cause the least damage, like any normal driver would.

Maybe it could scrape against the barriers to slow down without such a sudden stop for the passenger. IRL it’d depend on how well they’re lined up.

car is broken and cannot stop. otherwise it could just stop in every single one of the presented scenarios and the “moral dilemma” would be pretty boring

90% of the Sophie’s Choice hand-wringing about this is just nonsense anyway. The scenarios are contrived, exceedingly unlikely, and the entire premise that you can even predict outcomes in these panic scenarios simply does not resemble any real moral framework which actually exists. A self-driving car which attempts to predict chaotic probabilities of occupant safety is just as likely to get it wrong and do more damage.

Yes, the meta ethics are interesting, but the idea that this is any more actionable than trolley problems is silly.

> Yes, the meta ethics are interesting, but the idea that this is any more actionable than trolley problems is silly.

the point is that we are reaching the point where the trolley problem stops being an “interesting theoretical brain teaser” and starts being something we have to know the answer to.

because we have to know, as in we have to decide, whether to flip the switch or not. we have to decide whether we are going to protect these three over that one. whether this kid has more right to live than the senior, because the senior’s life is almost over anyway. whether that doctor’s life is more valuable than the grocery clerk’s.

and so on.

up until now, there wasn’t really a decision. the majority of people have trouble controlling the car under normal circumstances; in case of an accident, they just hit the brakes, close their eyes and pray. whatever happens is really just the result of chance; there isn’t much philosophy about the value of life in play.

there is still some reasoning though: most of us probably won’t steer the car into a group of kindergarten kids on the sidewalk just to protect ourselves.

but the car will have more information, will be able to evaluate more of it in such a short time than a person can, and will be able to react better.

the only thing that remains is that we have to tell it what to do. we have to tell it whose life has bigger value and whose life is worth protecting more. and that is where the trolley problem stops being an academic exercise.

The car should be programmed to self-destruct or take out the passengers always. This is the only way it can counter its self-serving bias or conflict of interests. The bonus is that there are fewer deadly machines on the face of the planet and fewer people interested in collateral damage.

Teaching robots to do “collateral damage” would be an excellent path to the Terminator universe.

Make this upfront and clear for all users of these “robotaxis”.

Now the moral conflict becomes very clear: profit vs life. Choose.

> The car should be programmed to self-destruct or take out the passengers always.

interesting idea. do you think there is big market for such product? 😆

With a good enough regulation, there would be no market for such horrors.

Well yes and no.

First off, ignoring the pitfalls of AI:

There is the issue at the core of the trolley problem. Do you preserve the life of a loved one or several strangers? This translates to: if you know the options when you’re driving are:

- Drive over a cliff / into a semi / other guaranteed lethal thing for you and everyone in the car.

- Hit a stranger but you won’t die.

What do you choose as a person?

Then, we have the issue of how to program a self-driving car for that same problem. Does it value all life equally, or is it weighted to save the life of the immediate customer over all others?

Lastly, and really the likely core problem, is that modern AI aren’t capable of full self driving, and the current core architecture will always have a knowledge gap, regardless of the size of the model. They can, 99% of the time, only do things that are in their data models. So if they don’t recognize a human or obstacle, in all of the myriad forms we can take and move as, they will ignore it. The remaining 1% is hallucinations that end up being randomly beneficial. But, particularly for driving, if it’s not in the model they can’t do it.

We are not talking about a “what if” situation where it has to make a moral choice. We aren’t talking about a car that decided to hit a person instead of a crowd. Unless this vehicle had no brakes, it doesn’t matter.

It’s a simple “if person, then stop” not “if person, stop unless the light is green”

A normal, rational human doesn’t need a complex algorithm to decide to stop if little Stacy runs into the road after a ball at a zebra/crosswalk/intersection.

The ONLY consideration is “did they have enough time/space to avoid hitting the person”
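To put a number on “enough time/space”: a common textbook approximation treats stopping distance as reaction distance plus braking distance under constant deceleration, d = v·t + v²/(2a). A minimal Python sketch; the function names and the example figures (10 m/s, 1 s reaction time, 5 m/s² deceleration) are illustrative assumptions, not measurements for any real vehicle:

```python
def stopping_distance(speed_mps: float, reaction_s: float, decel_mps2: float) -> float:
    """Reaction distance (v * t) plus braking distance (v^2 / 2a), assuming constant deceleration."""
    if decel_mps2 <= 0:
        raise ValueError("deceleration must be positive")
    reaction_distance = speed_mps * reaction_s
    braking_distance = speed_mps ** 2 / (2 * decel_mps2)
    return reaction_distance + braking_distance


def can_stop_in_time(gap_m: float, speed_mps: float, reaction_s: float, decel_mps2: float) -> bool:
    """True if the vehicle can come to a stop within the gap to the person ahead."""
    return stopping_distance(speed_mps, reaction_s, decel_mps2) <= gap_m


# Illustrative numbers only: 10 m/s (36 km/h), 1 s reaction time, 5 m/s^2 braking.
print(stopping_distance(10.0, 1.0, 5.0))  # 20.0
print(can_stop_in_time(25.0, 10.0, 1.0, 5.0))  # True
print(can_stop_in_time(15.0, 10.0, 1.0, 5.0))  # False
```

A shorter reaction time only shrinks the first term; the braking term grows with the square of speed, so at higher speeds it dominates regardless of who (or what) is driving.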

The problem is:

Define “person”.

A normal, rational person does have a complex algorithm for stopping in that situation. The trick is that the calculation is subconscious, so we don’t think of it as complex.

Hell even just recognizing a human is so complex we have problems with it. It’s why we can see faces in inanimate objects, and also why the uncanny valley is a thing.

I agree that stopping for people is of the utmost importance. Cars exist for transportation, and roads exist to move people, not cars. The problem is that from a software POV, ensuring you can identify a person 100% of the time is still a postdoctoral-level research problem. Self-driving cars are not ready for open use yet, and anyone saying they are is either delusional or lying.

Just a lil ml posting

Isn’t China the place where they make sure you’re dead when they hit you? Backing up and running over you multiple times.

It’s not that bad anymore.

Why are social media opinions any factor in this discussion?

It’s beneficial to know what the general public thinks about issues?

I don’t think “posts on social media” is a good indicator for what the public thinks anymore, if ever. The amount and reach of bot or bought accounts are disturbingly high.

Social media aren’t “the general public”

Do you have a better way of interviewing Chinese Nationals for Western media?

Yes

With the terrible demographic distribution, the absolute sewage that social media is, and the bots that make more than half the content: if you want to know what the general public thinks, you could not choose a worse source.

…they asked on social media.

Reading the comments I get the impression that most people didn’t actually read the article, which says that a woman was barely touched and not injured by a self-driving car while crossing the street with a red light.

There is barely any “news” here, let alone sides to take, as the car correctly halted as soon as possible after noticing the pedestrian’s unforeseeable move.

I am perfectly aware that self-driving technology still has numerous problems corroborated by the incidents reported from time to time, but if anything this article seems a proof that these cars will at least not crush to death the first pedestrian that does a funky move.

Why read the article when I can repeat lies and make tired jokes about social credit scores because China bad?

The car has perfect social credit, the ‘human’ failed to yield at a crosswalk once in 2003.

You do the math, idiotic westerners suck with our superior inverse-logic.

Swap the word “car” for “gun”… and it would be America!

Touché

How does “driverless cars hitting people is so incredibly rare that a single instance of it immediately becomes international news” at all signify “boring dystopia”? If anything we should be ecstatic that the technology to eliminate the vast majority of car deaths is so close and seems to be working so well.

Don’t let perfect be the enemy of ridiculously, insanely amazing.

Yeah that was my thought too… driverless cars don’t need to never fuck up, they need to fuck up less than humans do. And we fuck up a LOT.

I’d argue they need to fuck up less than the alternative means of transport that we could be transitioning to if we weren’t so dead-set on being car dependent. So dead-set, in fact, that we are allowing ourselves to be made complacent by billion-dollar companies that peddle entirely new technology to excuse the death and destruction to our environment and social fabric that they’ve wrought upon us and continue to perpetuate, instead of demanding new iterations of the old, safer, more affordable, more efficient, but unfortunately less profitable tech that our country sold out to those same monied interests for them to dismantle.

I mean, I’m on board with the fuck-cars reasoning, but also recognize that we’ll never make it happen except by our own extinction. And we’re speedrunning that shit. Let’s take whatever improvements we can realistically get, be it cars or whatever else, and hit what’s left of Earth’s ability to support life as comfortably as possible. If that includes running over fewer people by using R2D2 to cart us around vs our own monkey brains… cool! If it’s something better, extra cool! I’ll take progress wherever I can get it.

Exactly. As early as the technology still is, it seems like it’s already orders of magnitude better than human drivers.

I guess the arbitrary/unfeeling impression of driverless car deaths bothers people more than the “it was just an accident” impression of human-caused deaths. Personally, as long as driverless car deaths are significantly rarer than human-caused deaths (and it already seems like they are much, much rarer), I’d rather take the lower chance of dying in a car accident, but that’s just me.

I think the problem right now is that driverless cars are still way worse than human drivers in a lot of edge cases. And buffalo buffalo buffalo when you have so many people driving every day you end up with a lot of edge cases.

That’s probably true, but their handling of edge cases will only get better the more time they spend on the roads, and it already looks like they’re significantly safer than humans under normal circumstances, which make up the vast majority of the time spent on the road.

I think the hook of the story is people backing the not alive and not conscious vehicle instead of the injured, alive and conscious human.

I’m all for automating transportation, but if the importance of that convenience outweighs our ability to empathize, we’re in for a real sad century.

I see you’re not familiar with the trend of autonomous vehicles hitting pedestrians and parked cars.

They’ve been completely banned. They were suspended from San Francisco after many, many incidents. So far their track record is inferior to humans’ (see Tesla Autopilot, Waymo, and Cruise), so you don’t need to worry about perfect.

As someone who was literally just in San Fran, the driverless cars are not only a thing, but they’re booked out days in advance, so idk where you’re getting your info from.

They were suspended last year. https://www.nytimes.com/2023/10/24/technology/cruise-driverless-san-francisco-suspended.html

I was in 2 of them in Phoenix, June 18th/19th EST. Took one home from the bar, and one to go pick up the rental car from the bar to drop off at the airport.

Here’s a picture of one driving around a couple weeks ago

> In December, Waymo safety data—based on 7.1 million miles of driverless operations—showed that human drivers are four to seven times more likely to cause injuries than Waymo cars.

From your first article.

> Cruise, which is a subsidiary of General Motors, says that its safety record “over five million miles” is better in comparison to human drivers.

From your second.

Your third article doesn’t provide any numbers, but it’s not about fully autonomous vehicles anyway.

In short, if you’re going to claim that their track record is actually worse than humans, you need to provide some actual evidence.

Edit: Here’s a recent New Scientist article claiming that driverless cars “generally demonstrate better safety than human drivers in most scenarios” even though they perform worse in turns, for example.

If you just look at pure numbers, sure, you can make it sound good. When you look at the types of accidents, it’s pretty damning. Waymo and Cruise both have a history of hitting parked cars and emergency vehicles. Tesla Autopilot is notorious for accelerating into the back of parked emergency vehicles.

The issue is not the overall track record on safety but how AV accidents almost always involve doing something incredibly stupid that any competent, healthy person would not.

I’m not personally against self driving cars once they’re actually as competent as a human in determining their surroundings, but we’re not there yet.

> The issue is not the overall track record on safety but how AV accidents almost always involve doing something incredibly stupid that any competent, healthy person would not.

As long as the overall number of injuries/deaths is lower for autonomous vehicles (and as you’ve acknowledged, that does seem to be what the data shows), I don’t care how “stupid” autonomous vehicles’ accidents are. Not to mention that their safety records will only improve as they get more time on the roads.