Yes, we could just shoot the servers, but what if the AI develops an anti-bullet shield, and then we shoot it with anti-bullet shield bullets, and then it creates an anti-bullet shield bullet bullet shield, and then, … and then …

Anyway, those kinds of kids' reality-free imagination games of move and countermove were pretty cool when you were 8 years old.

Sorry got distracted a bit and just wanted to share, not related to the topic at hand.

this kinda happened with antitank weapons and highest iteration now is antitank missile paired with anti-anti-antitank missile. it’s rpg-30, russian wunderwaffe manufactured in symbolic numbers which only caused western militaries to develop countermeasures and was never used on large scale in any war

Not really what I meant, but interesting.

That reminds me of the star wars missile defense system, which according to some stories was never real and just intended to make the Soviets waste a lot of resources on trying to counter it.

> which according to some stories

this was cope, it was a defense funding scam

Perhaps the same could be said of all ~~religions~~ of America.

“look, not everything is a defense funding scam”

“well that is”

…it funded some fundamental research, fine by me

from what i understand, it was real as in r&d was real, but not much came out of this. in regard to nukes, maybe this programme provided some new fancy sensors. one of the logical responses to things like SDI is to increase number of ICBMs and decoys, which is exactly what soviets did, it doesn’t require developing similar ABM system. (nukes are ultimately tools of diplomacy, not really tools of war) of other things, we know that neutral particle beams work. some western weaponeers are toying with idea of high power lasers, but star wars era stuff were chemical lasers and what is used now are fiber lasers, so it’s not directly transferable

also Teller was insane and x-ray lasers never worked

@skillissuer Used in Ukraine. Possibly because they were running out of basic antiweapons.

all 20 of them?

everything that goes boom was used by now, but i doubt it’ll make a major difference

@skillissuer I have no idea how they could have been *needed* against Ukrainian tanks. My impression was that they didn’t have any tanks with active defense until they got EU/NATO models, but I could be wrong.

what makes me think that APSs are not a real factor either way is that everyone slaps ERA and anti-drone mesh on everything, which would interfere with radar. APSs historically had a huge blind spot on top, which is a bad thing in a war with drones. also, major user of APSs, IDF, slapped anti-drone grids on top of their tanks at the beginning of current Gaza war, so kinda probably that means that they are not really sure that it works good enough

Ukrainians have this thing https://en.wikipedia.org/wiki/Zaslin_Active_Protection_System but i’ve never seen something like Drozd/Arena used, nor western APSs. plenty of ERA everywhere tho

Relevant OOtS (last panel)

How many rounds of training does it take before AlphaGo realizes the optimal strategy is to simply eat its opponent?

I’m not spending the additional 34min apparently required to find out what in the world they think neural network training actually is that it could ever possibly involve strategy on the part of the network, but I’m willing to bet it’s extremely dumb.

I’m almost certain I’ve seen EY catch shit on twitter (from actual ml researchers no less) for insinuating something very similar.

[Taking the derivative of a function] oh fuck the function is conscious and plotting against us.

to be fair, assuming computers are like that because they hate all humans and want to fuck you up is basically true

it has been 0 days since I last accused a web standard of being a basilisk

I once tried to install a haskell package

that would explain npm.

It’s called a function plot for a reason!

> I’m almost certain I’ve seen EY catch shit on twitter (from actual ml researchers no less) for insinuating something very similar.

A sneer classic: https://www.reddit.com/r/SneerClub/comments/131rfg0/ey_gets_sneered_on_by_one_of_the_writers_of_the/

That’s it!

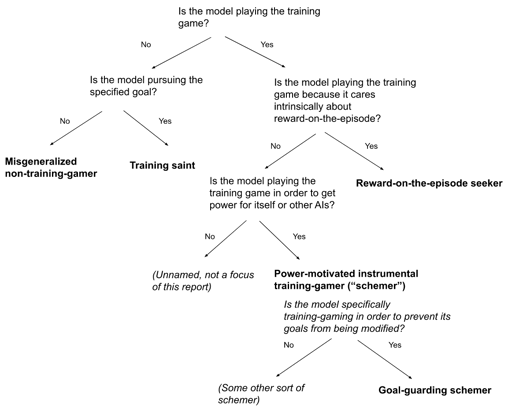

> I conclude that scheming is a disturbingly plausible outcome of using baseline machine learning methods to train goal-directed AIs sophisticated enough to scheme (my subjective probability on such an outcome, given these conditions, is ~25%).

Out: vibes and guesswork

In: “subjective probability”

at one of the places i worked this kind of data was called assnumbers.

Sorry, the thesis is that *checks reality* gradient descent might be consciously trying to avoid having its nefarious goals overridden?

what if right my spellcheck dictionary got so big it TOOK OVER makes u think

It is imperative that we first build a mathematical framework for guaranteeing benevolent thesauri before we travel this path any further!

Urban Dictionary’s Basilisk

If we grow AIs too big, say, bigger than the Moon, then well, the Moon could get jealous and mad at us.

Not enough people are preparing for this.