The only bit of excitement I’ve experienced about this was when they announced it would be force-disabled in Europe, so I didn’t have to turn it off myself.

Oh don’t worry, it’s coming to the eu

That’s horrible news

Tbf, it’s opt-in for now, so it isn’t that bad yet.

They need to release Apple Strength and Apple Dexterity to make the experience more complete

That would require Apple wisdom

I can’t afford Apple Wisdom.

No Apple Luck

Hard to argue against a nice quality build.

but Apple Karma is so wrecked, it’s hopeless

I feel like this can be generalized to AI in general for most people. I still don’t see much usefulness or quality in output in the scenarios where I’ve been exposed to AI LLMs.

I feel the same way about AI as I felt about the older generation of smartphone voice assistants. The error rate remains high enough that I would never trust it to do anything important without double-checking its work. For most tasks, the effort that goes into checking and correcting the output is comparable to the effort I would have spent to just do it myself, so I just do it myself.

For programming it saves insane time.

Real talk though, I’m seeing more and more of my peers in university ask AI first, then spend time debugging code they don’t understand.

I’ve yet to have ChatGPT or Copilot solve an actual problem for me. Simple, simple things are good, but for any real problem solving I find them more effort than just doing the thing.

I asked for instructions on making a KDE Widget to get weather canada information, and it sent me an api that doesn’t exist and python packages that don’t exist. By the time I fixed the instructions, very little of the original output remained.

As a prof, it’s getting a little depressing. I’ll have students that really seem to be getting to grips with the material, nailing their assignments, and then when they’re brought in for in-person labs… yeah, they can barely declare a function, let alone implement a solution to a fairly novel problem. AI has been hugely useful while programming, I won’t deny that! It really does make a lot of the tedious boilerplate a lot less time-intensive to deal with. But holy crap, when the crutch is taken away people don’t even know how to crawl.

Seem to be 2 problems. One is obvious, the other is that such tedious boilerplate exists.

I mean, all engineering is divide and conquer. Doing the same thing over and over for very different projects seems to be a fault in paradigm. Like when making a GUI with tcl/tk you don’t really need that, but with qt you do.

I’m biased as an ASD+ADHD person that hasn’t become a programmer despite a lot of trying, because there are a lot of things which don’t seem necessary, but huge, turning off my brain via both overthinking and boredom.

But still - students don’t know which parts of an assignment are absolutely necessary and important for the core task, and which are maybe not core but practically required. So they can’t even correctly interpret the help that an “AI” (or some anonymous helper) is giving them. And thus, ahem, prepare for labs …

If you’re in school, everything being taught to you should be considered a core task and practically required. You can then reassess once you have graduated and are a few years into your career, as you’ll then possess the knowledge of what you need, what you like, and what you should know. Until then, you have to trust the process.

People are different. For me personally “trusting the process” doesn’t work at all. Fortunately no, you don’t have to, generally.

When AI achieves sentience, it’ll simply have to wait until the last generation of humans that knows how to code dies off. No need for machine wars.

This semester I took a basic database course, and the prof mentioned that LLMs are useful for basic queries. A few weeks later, we had a no-computer, closed-book paper quiz, and he was like “You can’t use GPT for everything guys!”.

Turns out a huge chunk of the class was relying on gpt for everything.

Yeeeep. The biggest adjustment I/my peers have had to make to address the ubiquity of students cheating using LLMs is to make them do stuff, by hand, in class. I’d be lying if I said I didn’t get a guilty sort of pleasure from the expressions on certain students when I tell them to put away their laptops before the first thirty-percent-of-your-grade in-class quiz. And honestly, nearly all of them shape up after that first quiz. It’s why so many profs are adopting the “you can drop your lowest-scoring quiz” policy.

Yes, it’s true that once they get to a career they will be free to use LLMs as much as they want - but much like with a TI-86, you can’t understand any of the concepts your calculator can’t solve if you don’t have an understanding of the concepts it can.

One major problem with the current generation of “AI” seems to be its inability to use relevant information that it already has to assess the accuracy of the answers it provides.

Here’s a common scenario I’ve run into: I’m trying to create a complex DAX Measure in Excel. I give ChatGPT the information about the tables I’m working with and the expected Pivot Table column value.

ChatGPT gives me a response in the form of a measure I can use. Except it uses one DAX function in a way that will not work. I point out the error and ChatGPT is like, “Oh, sorry. Yeah that won’t work because [insert correct reason here].”

I’ll try adjusting my prompt a few more times before finally giving up and just writing the measure myself. It does not have the ability to reason that an answer is incorrect even though it has all the information to know that the answer is incorrect and can even tell you why the answer is incorrect. It’s a glorified text generator and is definitely not “intelligent”.

It works fine for generating boilerplate code but that problem was already solved years ago with things like code templates.
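For anyone who hasn’t used them, here is a minimal sketch of what a plain code template can look like, using Python’s standard `string.Template`. The template text and the `render_class` helper are made up for illustration; real editors and scaffolding tools ship their own snippet formats.

```python
from string import Template

# Hypothetical boilerplate template; the class shape is illustrative only.
CLASS_TEMPLATE = Template('''\
class $name:
    def __init__(self, $field):
        self.$field = $field

    def __repr__(self):
        return f"$name($field={self.$field!r})"
''')


def render_class(name: str, field: str) -> str:
    """Fill the template with a class name and a single field name."""
    return CLASS_TEMPLATE.substitute(name=name, field=field)


if __name__ == "__main__":
    # Prints the generated source for a one-field class.
    print(render_class("Point", "x"))
```

The output is deterministic: the same inputs always produce the same source, so there is nothing to double-check afterwards.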

I think a part of it is the scale of the data used to train it means there wasn’t likely much curation of that data. So it might complete the text using a wikipedia article, a knowledge forum post, a forum post that derailed the original topic, a forum post written by someone confidently wrong, a troll post, or two people arguing about the answer. In that last case, you might be able to get it to hash out the entire argument by asking if it’s sure about that after each response.

Which is also probably how it can correctly respond to “are you sure?” follow ups in the first place, because it was going off some forum post that someone questioned and then there was a follow-up.

It’s more complicated than that because it’s likely not just rehashing any one single conversation in any response, but all of those were a part of its training, and its training is all it knows.

If you don’t mind a few hundred bugs

Yup. We passed on a candidate because they didn’t notice the AI making the same mistake twice in a row, and still said they trusted the code. Yeah, no…

AI has absolutely wasted more of my time than it’s saved while programming. Occasionally it’s helpful for doing some repetitive refactor, but for actually solving any novel problems it’s hopeless. It doesn’t help that English is a terrible language for describing programming logic and constraints. That’s why we have programming languages…

The only things AI is competent with are common example problems that are everywhere on the Internet. You may as well just copy paste from StackOverflow. It might even be more reliable.

It doesn’t do anything that Emmet didn’t do 10 years ago.

Same. I’m not opposed to it existing, I’m just kind of… lukewarm about it. I find the output overly verbose and factually questionable, and that’s not the experience I’m looking for.

Even with other forms of generative AI, there are very few notable uses for it that aren’t just a gimmick or having fun with it, and that aren’t already achievable via other means.

Being able to add a thing to a photo is neat, but also questionably useful, when it is also doable with a few minutes of Photoshop.

I’ve a friend who claims it can be useful for scripts and quick data processing, but I’ve personally not had that experience when giving it a spin.

It’s a nice way to search for content or answers without all the ads that websites have nowadays. Of course, it’s only a matter of time until the AI/LLM responses are surrounded by (or embedded with) ads as well.

llm and search should not be in the same sentence

Or it much prefers to give you answers from “partners.” For example:

Me: How can I find a good set of headphones?

AI: A lot of people look for guides and reviews to find a good set of headphones. The important features to look for are… <insert overcomplicated nonsense here>. This can be overwhelming, so consider narrowing the search to a reliable product line like those by Beats (or whatever advertiser). Do you want some links to well-reviewed products?

Ick…

Lol, that reminded me that I saw a pair of “Beats x Kim Kardashian” y’know, audio engineer/designer KK finally giving the public a taste of real performance combined with chic luxury aesthetic 🥴

I still can’t wrap my head around the “Beats” craze. It’s like headphones didn’t already exist, lol!

Install Firefox and download uBlock Origin

Shitposting has never been easier though!

As a novice with little training, I’ve found AI to be helpful with running a server. Other than that, I depend on my own internet searches for info.

Yup. Photo cleanup was cool to try once, but I’ll never use it again. Removing stuff from photos with a single tap also bugs me a bit in general, I’m not sure it’s something we should make so easy. Message summaries are absolute shit and have already caused confusion for me. I’m not even talking about the proper notification summaries, just the auto-summaries in the preview lines of the whole iMessage list. A number of them have really fucked with me. For example, a friend asked me to FaceTime her in a few days, and the summary just said “FaceTime request.” And I was like “shit, did I miss a call?” As far as I can tell I can’t turn that off without disabling the entire AI setting.

I’m also not sure how to feel about all of Apple’s privacy talk when it comes to their AI features. They say certain features will stay on device, which is great, but for everything else, as far as I’ve noticed there is no mention of what goes to OpenAI’s servers, since their AI is still primarily powered by OpenAI. There’s actually no mention of OpenAI in any of the disclaimers or warnings I read when I first enabled it.

Apple Intelligence isn’t “powered by OpenAI” at all. It’s not even based on it.

The only time OpenAI servers are contacted is when you ask Siri something it can’t compute with Apple Intelligence, but even then it clearly asks the user first if they want to send the request to ChatGPT.

Everything else regarding Apple Intelligence runs either on-device or on their “Private Cloud Compute” infrastructure, which apparently uses M2 Ultra chips. You then have to trust Apple that their claims regarding privacy are true, but you kind of do that when choosing an iPhone in the first place. There’s some pretty interesting tech behind this actually.

I appreciate the clarification! I definitely misinterpreted the reporting about this, and clearly didn’t dig deeply enough. This makes me feel a bit better about using the non-ChatGPT features.

You can turn off (specifically) Message summaries in settings > Apps > messages > Summarize Messages

Well shit, thank you. I swear I searched for that setting before, but there it is!

iOS settings are like mirages I swear. Whoever designed the UI should be declared criminally insane

Omg I really thought I was the only one. I can never find the setting I want. I think it’s one of those examples where Apple oversimplified to the point of confusion.

There’s no way OpenAI is letting Apple use them for free. So where is the money coming from? AI is the hot new thing and expensive to operate so I imagine Apple is paying quite a lot. There needs to be a new form of income or this wouldn’t make sense from a business perspective. I imagine there is data harvesting from the AI service.

I’d actually be surprised if Apple pays anything to OpenAI at the moment. Obviously running some Siri requests through ChatGPT (after the user confirms that’s what they want to do) is quite expensive for OpenAI, but Apple Intelligence doesn’t touch OpenAI servers at all (just Siri has ChatGPT integration).

Even then, there’ll obviously still be a lot of requests, but the problem OpenAI has is that they aren’t really in a negotiating position. Google owns Android and so most phones default to Gemini, instantly giving them a huge advantage in market share. OpenAI doesn’t have its own platform, so Apple having the second-largest install base of all smartphone operating systems is OpenAI’s best chance.

Apple might benefit from OpenAI but OpenAI needs Apple way more than the other way around. Apple Intelligence runs perfectly fine (I mean, as “perfectly fine” as it currently does) without OpenAI, the only functionality users would lose is the option to redirect “complex” Siri requests to ChatGPT.

In fact, I wouldn’t be surprised if OpenAI actually pays Apple for the integration, just like Google pays Apple a hefty sum to be the default search engine for Safari.

Yeah, you nailed it. The latest reporting on this says that Apple isn’t paying them yet, because they think OpenAI will get more benefit out of just having their product in everyone’s faces:

Apple isn’t paying OpenAI as part of the partnership, said the people, who asked not to be identified because the deal terms are private. Instead, Apple believes pushing OpenAI’s brand and technology to hundreds of millions of its devices is of equal or greater value than monetary payments, these people said. Source.

I went into settings on my phone and disabled it immediately

I tried it one time, and it’s just as “slop” as the rest of generative AI. CEOs have no taste

CEOs have no ~~taste~~ clue

Techbro CEOs are especially susceptible to the hypetrain and then want it implemented somehow, despite the tech not living up to the imaginary magic bullet they got from their superficial info.

I gotta be honest, the push notification summaries are more annoying than they are useful. Like. I’m going to read a text blurb of 100 or so characters. It’s an extra step to see the summary and then the actual message itself.

As the general rule I feel the same about more or less all of the “AI” that is available to consumers from the likes of Google, OpenAI, etc.

It just seems more like a different way to do things with digital assistants or search engines that we have already been able to do for years.

IMHO, they have pretty different use cases. For example, I can’t use a search engine to compose things.

From my experience iOS actually got dumber. At least the keyboard did, which is annoying. The way keys respond to what you type has been a thing since the first iPhone. But two updates ago or so, they butchered it completely (especially if you type in German), making texting pretty difficult at times. I’ve asked other users and some of them experience the same issue: certain keys just do not want to get tapped sometimes because the algorithm expects something else, making the hitboxes of unwanted keys way too big. Needless to say, I’m not ready to trust Apple’s Intelligence just yet.

I experience this way too much. I have a nostalgia for when all of the problems I had with computers (broadly) were because I did something wrong… not because the computer is trying to fix something or guess something or anticipate something. Just let me type.

Yesterday, I typed out the letters of a word I wanted, and after typing a second word, I saw my iPhone “correct” the first word I typed to something else entirely. NO. Stop assuming I made a mistake. You cause more problems than you solve.

It’s crazy because they’ve tried to ‘fix’ something that wasn’t broken at all. It was one of the best features. Most users didn’t even notice there was an algorithm behind their keyboard. It just felt natural. But now it’s so aggressive, texting can almost feel like a warzone.

The iOS keyboard is one of the worst pieces of software I’ve ever used. It is actively hostile towards what I’m trying to type.

I have a theory that the key hit boxes slowly have error introduced to them over time.

Sort of like “my phone is slow” except not, because there’s no perceivable performance loss on iPhones other than battery degradation.

Is this a documented feature? In that it will modify the hit boxes for keys as you’re typing, based on likely next key press.

As far as I know it has been part of iOS since the first iPhone because Steve Jobs didn’t think people would enjoy typing on a small touch screen otherwise. I am assuming all digital keyboards have something like that to make writing easier.
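To make the idea concrete, here is a rough sketch of how prediction-weighted hit targets could work in principle. This is not Apple’s actual algorithm, which as far as I know isn’t public; the key layout, the `sigma` value, and the `resolve_tap` function are all assumptions made up for illustration. Each key is scored by how close the tap landed to its center, combined with how likely that letter is to come next.

```python
import math

# Hypothetical key centers on a one-row layout (arbitrary units).
KEY_CENTERS = {"q": (0.0, 0.0), "w": (1.0, 0.0), "e": (2.0, 0.0)}


def resolve_tap(tap, next_letter_probs, sigma=0.5):
    """Pick the key that maximizes touch likelihood times next-letter prior.

    tap: (x, y) touch position.
    next_letter_probs: assumed language-model probabilities per key.
    sigma: assumed spread of touch error around a key center.
    """
    best_key, best_score = None, -math.inf
    for key, (cx, cy) in KEY_CENTERS.items():
        dist_sq = (tap[0] - cx) ** 2 + (tap[1] - cy) ** 2
        # Gaussian touch model: taps farther from the center are less likely.
        log_likelihood = -dist_sq / (2 * sigma ** 2)
        # Keys the predictor considers unlikely get a tiny floor probability.
        log_prior = math.log(next_letter_probs.get(key, 1e-6))
        score = log_likelihood + log_prior
        if score > best_score:
            best_key, best_score = key, score
    return best_key


if __name__ == "__main__":
    tap = (1.4, 0.0)  # physically closer to "w" than to "e"
    uniform = {"q": 1 / 3, "w": 1 / 3, "e": 1 / 3}
    skewed = {"q": 0.05, "w": 0.05, "e": 0.9}
    print(resolve_tap(tap, uniform))
    print(resolve_tap(tap, skewed))
```

With equal priors the nearest key wins, but a strong enough prediction can hand the same tap to a neighboring key. That would match the complaint above about “hitboxes of unwanted keys” feeling too big when the predictor is overconfident.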

Daily iPhone user. Haven’t really noticed any difference. They really pushed how tightly integrated the experience would be, but honestly, I don’t really notice.

Maybe they integrated it so well that it looks exactly the same as what they started with.

Probably worth noting, this survey was taken before 18.2 went live with a ChatGPT integration, image generation, etc.

Even with integrations, a lot of the automatic replies basically boil down to “yes, thanks” and “no thank you” to every text. It isn’t even like… A longer message. It’s just two or three words, tops. If I’m going to use AI to write my texts, it’s going to be for something longer than a “yes lol” text.

Agreed.

IMHO, the only truly useful thing is writing tools and Siri being able to query ChatGPT for complex questions instead of telling people to pull out their phone and search the web.

The stuff everyone was actually interested in is likely in 18.4. On-screen awareness, integration with installed apps, contextual replies, etc.

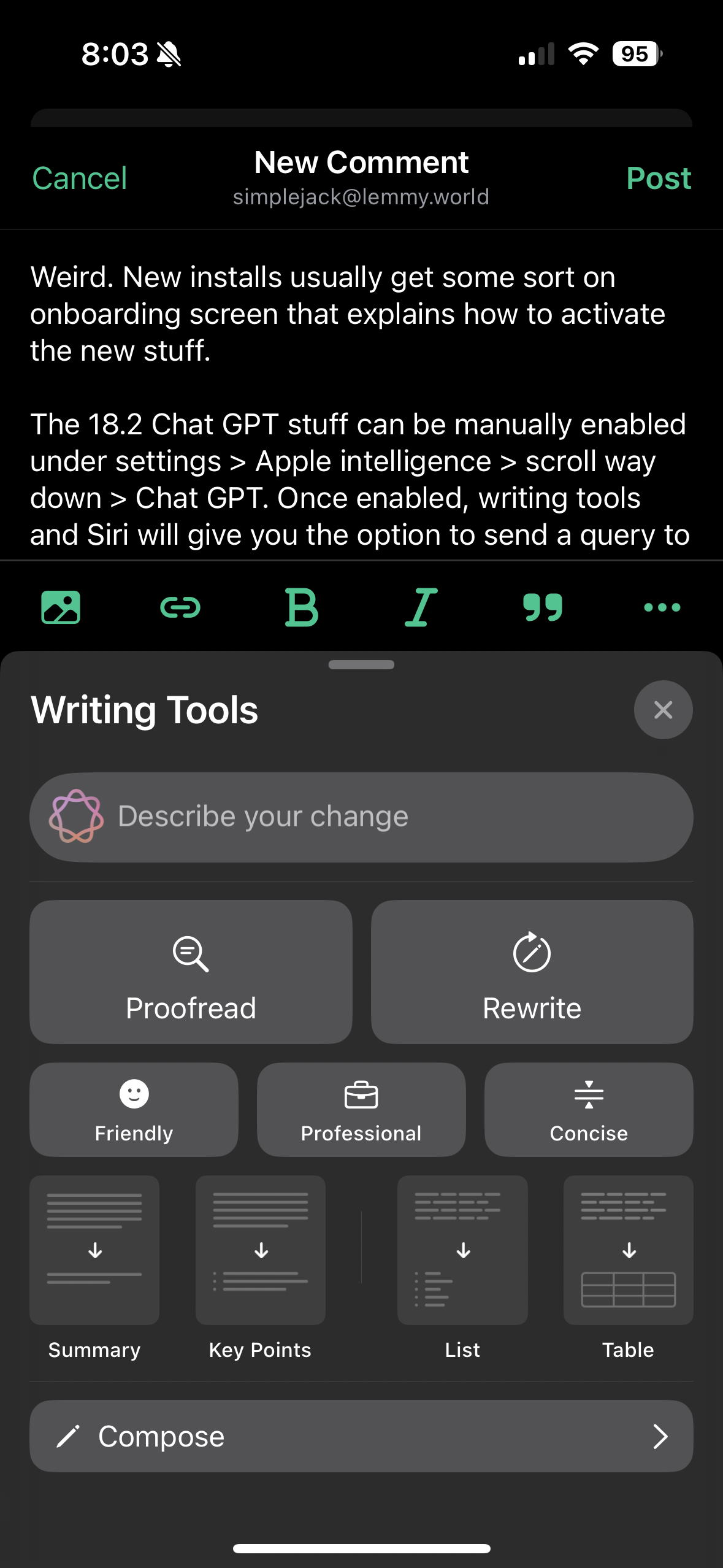

Weird. New installs usually get some sort of onboarding screen that explains how to activate the new stuff.

The 18.2 ChatGPT stuff can be manually enabled under settings > Apple intelligence > scroll way down > ChatGPT. Once enabled, writing tools and Siri will give you the option to send a query to ChatGPT instead of Apple’s model.

If Siri gets stumped, it will ask if you want to query ChatGPT. Or you can just prompt it to Ask ChatGPT ______.

Writing tools has it buried under “compose” which is at the very bottom of the writing tools sheet.

Oh, good point

Much like many of us see no value in apple products.

I’m very much enjoying the GenMoji stuff. Being able to send or react with an emoji tailored to the situation is not useful, but it’s fun when you come up with a good one.

Also Siri is definitely more functional than it used to be. It understands when I correct myself or change my mind. Very handy. Still far from perfect though.

Also on iPad all the AI-driven handwriting cleanup and stuff is really nice when taking notes.

But otherwise it’s not super useful. I don’t like the notification summaries, they aren’t very good. Though they are sometimes hilarious. Like Ring being summarized as “Thirteen people at your door and gunshots heard.”

Yeah Siri being more understanding is pretty nice and has gotten me to actually use it more again, but beyond that none of it is super useful to me.

…I did enjoy finally getting to make “shrimp with cowboy hat” using Genmoji after Apple kept using that as an example, though

I made a “Sanderlanche” emoji for use when discussing Brandon Sanderson’s mastery of story structure. Reading every one of his books I reach a point where it feels like I have to frantically push to the end.

It’s just a book with a big vaguely-snowy wave coming out of it, but I like it.

Gotta have iPhone 15 Pro, 16, or 16 Pro, unfortunately. Or an M-series iPad.

Ah! Have you previously enabled Apple Intelligence? I think in 18.1 there was a queue thing to set it up. Could be that. I also vaguely remember there being something it had to download for the image stuff. Check in Settings, maybe? I was half asleep when I got it working so I don’t remember too well.

Not surprising, I vaguely recall them saying they would roll out other language support over time.

This whole staged rollout very much smells of, “We were caught out by the industry shifting hard to AI and, despite including neural engines in our chips for a while, we weren’t ready.”

As a normal user, I don’t find AI useful.

Like, anybody’s, for much of anything other than generating fever-dreams and Plex art.

code, tho.

Bash scripts, maybe, but it’s not necessary for me.

It’s almost like siri already does what people want it to, and anything beyond that is a waste of time and resources

Genmoji is a waste of space. The image generation is really bad (but then again, most of these platforms are). The writing tools are mediocre. About all that is moderately useful is that Siri seems a little better at processing commands.

If they want to start charging for this, I’m out.

I’m not sure why they would charge for it, most of it happens on-device.

True, but the RnD ain’t cheap. And, if everyone else starts charging (as I am sure they eventually will), Apple will follow.

That’s why Apple charges an arm and a leg for RAM.